Mental Models to Improve GMAT Critical Reasoning Strategy

While this is ostensibly a guide for Critical Reasoning, many of the things listed here will help us to make better decisions on the rest of the GMAT and ideally in life as well. That last part is up to you, obviously, but I can help you with the GMAT stuff.

One of the most important things to understand is how sloppy our normal thinking is. For the most part, we’re not reasoning through every incident from scratch–in other words, there’s no point in trying to figure out that “fire burns” the hard way every single time.

There are two very simple ways that we can learn to improve our logic.

First, the basic processes that are used to analyze a situation are what we can call Mental Models; Shane Parrish at Farnam Street has created a substantial list of these models, of which his General Thinking Concepts are tremendously useful for GMAT purposes. We will address these at length. These models–particularly that of Inversion–can help us analyze a situation to propose possible solutions as well as to identify possible drawbacks or reasoning errors.

Second, the best way to know whether we’ve been caught out is to have a robust catalogue of Logical Fallacies. If we are aware of common thinking traps, then we will be more likely to recognize them when they appear and therefore, one would hope, much less likely to be fooled by them. We’ll address those in a later post.

When we can see the error in the argument, we only need to find the answer choice that preys upon this error. That is, of course, easier said than done. But hey, we gotta start somewhere…

Chapter 1: The Map is Not the Territory

Or, as Alan Watts liked to say, “the menu is not the meal.”

Think about this: every explanation, no matter how detailed, is a reduction from the original. No matter how lifelike, it’s a painting of a sunset, not the sunset itself.

Is being reductive necessarily a bad thing? Probably not. Being reductive is how we learn things. What would be the point of a 1:1 scale map? It certainly wouldn’t prevent anyone from getting lost in the terrain the way a smaller, less detailed one might.

So there is a use for reducing things. The question is how much use, and whether the reduction is applied appropriately.

Let’s say we have a leader X who claims all Y people are Z (not mentioning any names, of course). This conveniently removes from the map all the Y-people who are successful, hard-working, and educated, and neglects the socioeconomic factors that may have encouraged the Y-people to behave in ways that might be interpreted as Z-like.

Furthermore, this also removes from the map all reasons that one might behave in Z-like ways that do not necessarily indicate Z-ness as a moral characteristic.

In short, as shit gets real it has an unfortunate tendency to get more complex than one might like to admit. In fact, it is arguably more complex than we are actually capable of comprehending.

There are nearly always competing explanations. In fact, despite Occam’s Razor (see Chapter 8), black-and-white simplicity often suggests a poor, misleading, or at best misguided read of the situation.

Now you’re probably wondering what I’m yammering about. How does this have anything to do with GMAT Critical Reasoning strategy?

This:

Don’t assume the convenient explanation (usually the one provided in the argument itself) is sacrosanct. Always ask whether there’s a better or competing explanation that would work just as well.

Chapter 2: Circle of Competence

Parrish makes a point that this is an issue of understanding that one is an expert in one’s own field, but perhaps not in other disciplines. Furthermore, the specialization to which we are all subject may actually give us blind spots as we attempt to move forward in any way.

The key, suggests Parrish, is to be conscious of the boundaries of what one knows. Given that we’re walking into the GMAT as generalists, this doesn’t seem as though it would really apply.

Or does it?

Let’s look at it from a slightly different perspective: the most important thing for us to be aware of is actually the boundaries of the argument. We need to be spectacularly clear on what we know, which gives us a bright line to distinguish what we don’t know.

It is vitally important to catalogue what we are told and its implications, while staying particularly conscious of what it does not imply. After all, we can’t make statements or assumptions about what we don’t know, particularly when the argument is designed to make us do so.

In short: be careful what you read, and make sure never to read more into the argument than is actually there.

What’s vitally important, in fact, and this goes for Reading Comprehension as well, is that you focus on the WHAT of the problem rather than the WHY. As Storytelling Animals, human beings love to attribute motivations to things.

Make sure you stick to the facts: that is, don’t pick a “likely story” at the cost of the logic.

Chapter 3: First Principles

This might actually be even better for Quant than it is for Verbal. This is the way to think like a physicist and really take things down to the core essence of the question.

Look at it this way: it was impressed upon me as a Physics undergraduate that all Classical Physics (up to but not including Quantum Mechanics) can be derived from Newton’s Second Law. Talk about a First Principle!

Bearing that in mind, someone–me, I’m assuming, but hopefully someone else as well–has probably told you that it’s pointless to memorize a formula that you can’t derive.

Formulas are time-saving devices, not thinking-saving devices. Don’t be a fool.

ANYHOO, you’re probably familiar with me complaining about memorizing formulas throughout the Quant GMAT Guides. The point is this: First Principles are things that we can’t realistically reduce because they are effectively just different ways to frame definitions.

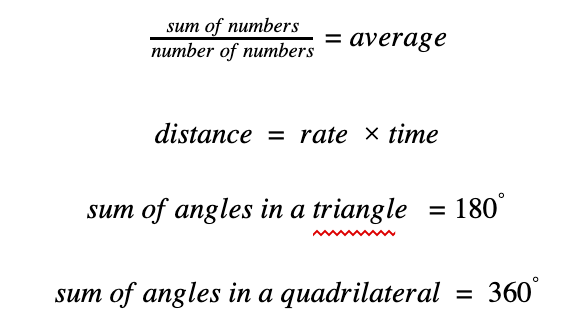

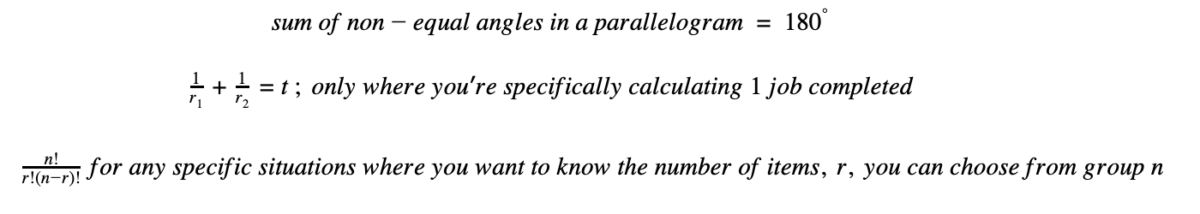

First Principles, then, are similar to axioms in the sense that we must take them to be true in order for everything else to be true. That would include things such as these:

Second-order principles would be adjustments of such things to match particular problems. These might be useful in certain circumstances, but they would hardly be applicable in every situation.

This would include things such as:

At the risk of losing the plot for the sake of pithiness, let’s just say that second-order formulae aren’t necessary if we know the First Principles.

That is, at worst, we can derive any second-order formula from the First Principles, so we might as well not bother memorizing it.

Chapter 4: Thought Experiments

This is probably as simple as it sounds, or hopefully it is. A Thought Experiment is simply imagining a situation with a certain set of constraints in place and asking “what if?”

This allows us to consider situations that would be ridiculous, stupid, impractical, or realistically impossible and still determine their validity in the real world.

For example, for someone with less practical and more theoretical inquisitiveness, my experiment at age twelve or so with “what would happen if I tip this raspberry candle into a spinning fan in the middle of the living room” might better have been worked out as a Thought Experiment.

Similarly, things that would be feasible but arguably cruel, like putting a cat (why not a Chihuahua?) in a box with a vial of poison triggered by the decay of a radioactive isotope, are covered by the whole Thought Experiment thing.

Obviously it’s possible to go overboard here: the old academic philosophy yawn about Planet XYZ that is full of people exactly like us in every cellular respect yet who are not conscious but for all intents and purposes seem to be, yadda yadda yadda… yeah, I know. Some people get paid for this stuff. Then again, some people are paid to teach GMAT, so who wins?

Here’s where it’s useful for the GMAT Critical Reasoning Strategy:

Thought Experiments allow us to see the things that aren’t working in arguments that might otherwise seem sound. All we need to do is to follow the bones of the argument’s structure, while changing its content to be so ludicrous that it would never work.

In other words: 1) find an analogous case; 2) make it as ludicrous as possible.

This allows us to see potential flaws in the problem. This is, so I am told, the same thing the philosophy guys are doing on Planet XYZ. They’re just being more obtuse about it.

Let’s look at this argument for a moment, without dressing it up or identifying the error in it just yet:

On any given day, more than 10,000 teenagers are absent from school and crime rates are highest during the hours that these teenagers should be in school. Therefore, it is these same teenagers who are responsible for the high crime rates.

Let’s abstract this a bit first: we have an absent group of people and a crime committed. Because we cannot account for the whereabouts of this group of people at the time of the crime, we conclude that this group is responsible for the crime. With me so far?

Now, let’s turn the dials a bit and see what we get:

On any given day, my Great Aunt Gladys plays bingo at 12pm sharp. Today she was not at bingo and a liquor store was robbed on the other side of town. Therefore, my Great Aunt Gladys robbed the liquor store.

That is the same argument, kids. We haven’t done anything but change the cast of characters, and you just assume that because my Aunt Gladys is 86, she couldn’t possibly be responsible. You have obviously never met my Aunt Gladys.

The fact that this is extremely unlikely points out the problem with the argument. We’re confusing correlation–where two things happen at a similar time–with causation–where one thing causes another thing. More on this later, when we discuss Common Fallacies.

The fact of the matter is that it’s helpful to think about different situations that match the same structural template. If there’s a flaw in the structure, then the flaw will be retained in the new situation but might be made more evident because of context.
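One way to picture “same structure, different cast” is as a fill-in-the-blanks template. Here’s a minimal Python sketch; the template wording and the slot names (`who`, `place`, `event`) are my own paraphrase for illustration, not anything official from the GMAT.

```python
# Hypothetical paraphrase of the argument's skeleton; slot names are illustrative.
TEMPLATE = (
    "Nobody saw {who} at {place} when {event} took place. "
    "Therefore, {who} must be responsible for {event}."
)

original = TEMPLATE.format(
    who="the absent teenagers",
    place="school",
    event="the daytime crime",
)

ludicrous = TEMPLATE.format(
    who="Great Aunt Gladys",
    place="bingo",
    event="the liquor store robbery",
)

# Same skeleton, different content: if the second version sounds absurd,
# the flaw lives in the structure, not in the cast of characters.
print(original)
print(ludicrous)
```

Nothing about the logic changes between the two printed sentences; only the slots do.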

In other words, you’re creating a functional analogy for the argument. It’s just that Thought Experiment sounds classier, like something Einstein or Schrödinger would use.

The sillier the better. That’s the whole point. Remember, it’s like saying “it’s like saying…”

Chapter 5: Second-Order Thinking

Why does this have to be so fucking hard for people to understand?

Not specific to you–just in general. It’s the reason that proud vegans can’t understand why I don’t like to eat meat (hint: it doesn’t have to do with killing animals); it’s the reason that your drunk uncle thinks that snowfall proves that Global Warming is a Lie!; it’s the reason that people who don’t understand exponential growth think masks are pointless (or, presumably, why Grandma is dead).

Yes, I’m talking about that immemorial, world-over bane of the stupid: Second-Order Thinking.

Second-Order Thinking basically means that we need to consider not just the immediate effects of what we’re doing–but also the second-order effects on our community or environment.

It probably seems a bit strange to consider what happens outside our immediate influence, but this is actually a situation where the pandemic dead horse can be usefully flogged.

What we have to worry about, assuming that we are reasonably healthy and lacking comorbidities, is not so much ourselves but whether our carrying the bastard disease will cause problems for others down the line who might be affected through contact with us.

There are always effects that might appear down the line–whether anticipated or not!–so it’s generally useful to ask whether you’re failing to consider such things.

Chapter 6: Probabilistic Thinking

I mean, well, this should be obvious.

That’s the idea, of course, but it doesn’t illustrate how difficult the reality of Probabilistic Thinking really is. After spending a year doing the weather for a local radio station and then writing a dissertation on Quantum Mechanics, I’m willing to admit that probability is often a hard one to grasp.

Probability is on one hand a stroke of genius: it allows us to consider the likelihood of certain events coming to pass, which gives us a bridge between inductive and deductive logic. (To give it short shrift: inductive means “educated guesses” while deductive is like an algebra problem–you just rearrange information that’s already there to figure out what x is.)

That is, probability gives us a way to quantify educated guesses, which is actually pretty badass.

On the other hand, that means we are stupid enough to quantify educated guesses. One of the major points that we will cover later, along with the fallacies, is that the Past Does Not Predict the Future. Yet predicting the future from the past is precisely what probability is trying to do.

What’s the solution? Honestly, it’s just not to take this stuff too seriously. Just as we don’t actually know for certain that the sun will in fact rise tomorrow–if you think you do, you are a fool with no idea how many crazy people there are whose stubby little fingers are too close to their respective Big Red Buttons–we won’t know the actual outcome of a probability calculation until after the fact.

The way to prove probability is with empirical evidence. Yet at that point, it’s no longer probability because we already know the outcome.

There’s a damn good reason that probability sounds a lot like gambling: although its absolute origins are murky, if it didn’t arise directly from gambling, it was definitely developed mostly because of gambling.

That doesn’t mean that we can’t get some use out of it, though.

The thing with probability is that it forces us to look at different sides of an issue. After all, if there’s a 90% chance of X happening and a 10% chance of Y happening, we still need to understand the implications of Y as much as we do the implications of X. One could argue that it is “less necessary” to do so, but to ignore it completely would be absurdly risky.
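To put rough numbers on that, here’s a small Python sketch. The 90% and 10% come from the example above; the costs attached to X and Y are invented purely for illustration.

```python
# Probabilities (in percent) from the example; the costs are made-up assumptions.
outcomes = {
    "X": {"chance_pct": 90, "cost_if_it_happens": 1},
    "Y": {"chance_pct": 10, "cost_if_it_happens": 100},
}

# Weight each outcome's cost by how likely it is.
weighted_cost = {
    name: o["chance_pct"] * o["cost_if_it_happens"] / 100
    for name, o in outcomes.items()
}

for name, value in weighted_cost.items():
    print(name, value)
```

With these invented costs, the unlikely outcome Y carries more weighted cost than the likely outcome X, which is exactly why ignoring it would be risky.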

That is a bit like saying, “What do we do if our unborn baby is left-handed?” “Oh, I hadn’t considered that unfortunate eventuality. I guess we’ll just leave it in the dumpster.”

How this applies to the GMAT Critical Reasoning strategy: while Parrish argues that knowing the most likely outcome helps make better decisions, I would argue that GMAT Critical Reasoning sails a somewhat more perverse tack. Rather, it is useful for us to think about the weird, the seemingly paradoxical, or the boundary cases because this is what normally tends to get overlooked.

It’s also a place where Critical Reasoning is very similar to Data Sufficiency: always make sure to test the boundaries–diving for the middle is for the punters. Probabilistic thinking helps us to determine what those boundary cases actually are.

Chapter 7: Inversion (Reduction to Absurdity)

Parrish defines this as, roughly, starting at the end rather than the beginning, sort of like knowing the climax of a movie and working your way back to the setup. That’s all well and good, but I take a minor exception to that.

In short, I believe that it’s far too easy to engineer a situation to make the climax inevitable. We’re all storytellers who can convince ourselves of some pretty absurd things–e.g. politicians have our best interests at heart, government pensions will exist when we’re older, or that our cats aren’t plotting to murder us in our sleep–so it’s too damn easy to start from the end and figure out a beginning that actually works surprisingly well.

Rather, I’ll take Parrish’s idea of Inversion and move it one step beyond: we start at the end and apply a Reduction to Absurdity, which is of course already dealt with in the ASSUMPTIONS chapter. Remember: NEGATE THE CONCLUSION and keep the facts consistent.

If an animal is a chicken, then it is a bird. However, it would be incorrect to say that if an animal is a bird, then it is a chicken. We can negate CHICKEN and still get animals that are birds: e.g., an animal that is NOT a chicken might still be a turkey.

In other words, to start at the end of the argument, it’s most convenient to assume that the conclusion is actually NOT TRUE while the facts remain true. In that case, we can see how the argument would work if turned on its head.
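If it helps to see the chicken-and-bird case worked mechanically, here’s a minimal Python sketch. The little animal table and the helper name `counterexamples` are hypothetical, made up for this illustration. The helper looks for cases where the “if” part holds but the “then” part fails, which is what negating the conclusion while keeping the facts amounts to.

```python
# Hypothetical toy data: each animal and whether it is a bird.
ANIMALS = {
    "chicken": True,
    "turkey": True,
    "sparrow": True,
    "cat": False,
}

def counterexamples(premise, conclusion):
    """Return animals where the premise holds but the conclusion fails."""
    return [name for name in ANIMALS if premise(name) and not conclusion(name)]

def is_chicken(name):
    return name == "chicken"

def is_bird(name):
    return ANIMALS[name]

forward = counterexamples(is_chicken, is_bird)   # "if chicken, then bird"
converse = counterexamples(is_bird, is_chicken)  # "if bird, then chicken"

print(forward)   # [] : nothing breaks the original statement
print(converse)  # ['turkey', 'sparrow'] : the reversed statement breaks
```

An empty list means the statement survived the attempt to negate it; anything in the list is a concrete way the argument fails.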

Remember, nothing says that the conclusion must be true, but if we see that the argument is strong enough that there is no reasonable way we can negate it–even turned around–then we have a pretty strong argument.

If an animal is a chicken, then it is a bird whose name is part of the acronym used by the fast-food chain “KFC.” If I turn this around, I get: if an animal is a bird whose name is part of the acronym used by the fast-food chain “KFC,” then it is a chicken.

Luckily, you probably won’t have to deal with arguments that strong on the GMAT, so the old Reduction works wonders.

Note: “KFC” is apparently the legal name of the business now, and although it might seem that this implies that the letters have no meaning, the abbreviation still refers to “Kentucky Fried Chicken.” I can’t even begin to unpack the logic of this, and I’m not sure even Saul Kripke would have a good answer. Not worth trying.

Chapter 8: Occam’s Razor

The reason we need to know about Occam’s Razor is that we need to learn to avoid it. Occam, bless his heart, was trying to get people out of conspiratorial thinking, but he ended up with a staggeringly blunt little tool (yeah, yeah, I get it…).

The Razor, as it were, simply suggests that the simplest explanation is the best explanation. In other words, why meander when there’s a straight-line path? An example would be: there’s no reason to assume that Elvis and Tupac faked their deaths and now live together on a private Caribbean island when it’s simpler to assume that they were both victims of, er, “lifestyle.”

That’s all well and good, and it seems that it would make reasonable sense in real life–after all, most of the time a simple explanation probably is the best way to look at things.

However, that doesn’t necessarily work well on the GMAT. The simplest explanation is probably the most obvious or the most “aim for the middle” sort of explanation, but we really need to think about what might potentially go wrong. We need to look at the boundaries–the outside of the argument–to see what could ultimately pose a problem.

In that sense, leave Occam’s Razor to the punters and remember that the simplest way to complete a GMAT argument is usually what they’re expecting you to assume–and for that very reason it serves to gloss over the actual error in the argument.

Chapter 9: Hanlon’s Razor

In short, this one is “never attribute to malice what can reasonably be attributed to incompetence, self-interest, etc.”

This Razor is vastly more useful than poor little Occam’s in GMAT and life, rare though it be that the two might intertwine.

The way to apply this to GMAT Critical Reasoning strategy: stop it with the conspiratorial thinking! One person is not trying to get one over on the other guy. Stop attributing stories and motivations to people. It will not get you points on the test.

Sure, these things happen in real life. (Particularly where you’re from.)

They don’t, however, happen on the GMAT. Divining people’s incentives is a losing battle, particularly given how poorly aware most people are of their actual motivations.

As far as the GMAT is concerned, only cool, rational, evidence-based information will move the needle. No answer choice that hinges on someone’s vested interest is going to be correct.

For that matter, no answer choice with a “should”–or any other moral judgment–will ever be a correct answer. Calm, factual, logical. Keep it together, FFS.

Chapter 10: Framing

Now this is one that Parrish doesn’t actually list, but which comes most traditionally from communications training and/or alternative psychology such as NLP.

“Framing” probably makes a bit of intuitive sense, but in a technical sense it refers to defining the boundaries of the argument, which can have a great effect on how the argument is actually interpreted.

For GMAT Critical Reasoning strategy purposes, it’s important to remember that “scope” and “frame” mean essentially the same thing. Scope for Critical Reasoning questions is invariably defined by the conclusion of the argument. Remember that any answer that doesn’t directly fit the conclusion of the argument is not going to be the correct answer.

That is, remember that certain facts may present themselves and be interpreted in different ways; in other words, different conclusions may be drawn from these same facts. This is just another way to say that the conclusion frames the argument, and that by changing the conclusion, we can change the focus of what the argument is trying to say.

If the conclusion frames the argument in a stupid way, we’re much more likely to believe stupid arguments. For this reason, it’s always helpful to bear in mind that the facts must be treated as sacrosanct: facts always true, but conclusion often false.

Or just do it the quick ‘n’ dirty way: if you equate “frame of the argument” and “scope,” recognizing that this is defined by the boundaries of the conclusion, you’ll be fine.

Chapter 11: Opportunity Cost

Another bonus for us, also outside of the scope of Parrish’s book, but definitely dealt with in Gabriel Weinberg’s Super Thinking.

Opportunity cost is simply a way to consider the things that we’re sacrificing in order to achieve a certain goal. This often assumes some sort of zero-sum system, of course, but not necessarily.

It’s probably best illustrated by example. Let’s say that we have a system where A and B are in inverse proportion to one another: that is, more A means less B, while more B means less A.

For example, the more cats we have, the fewer mice we have, and vice-versa.

Now let’s assume that we increase the number of cats. This implies that we would have fewer mice, potentially to the point where the mice go extinct and we firmly have zero mice. This might make sense on the surface, but it actually does assume at least a couple of things.

First, it assumes that the system must stay in equilibrium. It’s entirely possible for the system to be completely disrupted; for example, we might increase the number of cats while tweaking some unknown variable that allows the mice to run rampant, overwhelming the cats.

Second, it assumes that what is good for the goose is good for the gander (or, in this case, what is good for the cat is good for the mouse); in a situation where nuclear fallout affects both populations, fewer cats does not necessarily imply more mice.

Third–and this is a much healthier way to look at the system–we should realize that the boundaries of the system are not necessarily static, and look at the variables relative to each other.

If a train company increases the speed of its trains, this seems good on the surface: obviously, journey time will decrease. However, if the amount of mechanical service that the individual trains require increases significantly, then this could easily overwhelm the advantage gained by faster journey times, because the trains can’t run as frequently as before if they’re always in the depot getting fixed.
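Here’s the train trade-off as a quick Python sketch. Every number in it is invented for illustration, and `trips_per_day` is a hypothetical helper, not a real scheduling formula.

```python
def trips_per_day(hours_in_service, journey_hours):
    """Number of complete journeys one train can make in a day."""
    return hours_in_service // journey_hours

# All figures below are invented for illustration.
before = trips_per_day(hours_in_service=20, journey_hours=4)  # slower, rarely in the depot
after = trips_per_day(hours_in_service=12, journey_hours=3)   # faster, often in the depot

print(before, after)
```

Under these assumed figures, the faster train completes fewer journeys per day than the slower one, because the time saved on each trip is swallowed by the extra hours in the depot.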

In other words, don’t rob Peter to pay Paul. Or if you do, just realize what you’re doing and give Peter a nice, reliable IOU. I know you’re good for it.